Emerging Technologies Shaping the Future of AI Hardware

A New Type Of Computing

After the last few years of rapid progress in AI technology, the builders of AI hardware have been among the major winners. This is because modern AI, which mostly relies on neural networks, uses computing power in a very different way from classical computers.

Instead of performing complex calculations with a powerful CPU, neural networks perform thousands or millions of simpler calculations in parallel.

(You can learn more about how neural networks were invented and work in “Investing in Nobel Prize Achievements – Artificial Neural Networks, The Basis Of AI”)

So far, graphics cards, or GPUs (graphics processing units), have been the favored tool to develop AI, dramatically boosting the revenues and profits of leaders in the sector like Nvidia (NVDA).

The market for AI hardware is expected to keep growing exceptionally quickly, at a 31.2% CAGR from 2025 to 2035.
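To put that growth rate in perspective, the compounding can be worked out directly. The sketch below assumes the 31.2% rate holds uniformly over the ten years from 2025 to 2035, which is a simplification; the function name is ours and purely illustrative.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Return how many times larger a quantity becomes after compounding."""
    return (1.0 + cagr) ** years


# Assumption: the 31.2% CAGR applies uniformly across 2025-2035 (10 years).
multiple = growth_multiple(0.312, 10)
print(f"Market size multiple after 10 years: {multiple:.1f}x")
```

Under that assumption, the market would end the period roughly 15 times larger than it started.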

Source: Roots Analysis

This period should also see the emergence of many new types of AI hardware, as the GPUs repurposed for AI calculation are progressively replaced by chips designed specifically for this application.

In the long run, more exotic forms of computing are likely to make their way into the AI hardware market, from application-specific designs to non-silicon chips or even actual biological neurons.

How AI Thinking Works

The fundamental difference between classical supercomputers and AIs is how data is processed. Instead of solving complex calculations, neural networks create virtual nodes connected into a network. While the earliest neural networks contained barely a few dozen nodes, making a few hundred connections, modern neural networks like the ones used by ChatGPT use trillions of possible connections, reaching levels of complexity not dissimilar to the human brain.

Source: Nobel Prize

This different method of calculation requires hardware able to perform millions of operations in parallel, even if the computing power dedicated to each is relatively small.

Luckily, this type of hardware has already been in operation for many years: graphics rendering on GPUs, mostly for 3D simulation and video games, also relies on many small calculations performed in parallel.
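A rough illustration of what "many small calculations in parallel" means in practice: in one layer of a neural network, each node is just a weighted sum of the inputs, and no node depends on another. The sketch below uses plain Python and runs the nodes one after the other; the toy values and the ReLU activation are our assumptions, not something specified above. A GPU would compute all the nodes at the same time.

```python
def dense_layer(inputs, weights, biases):
    """Compute one neural-network layer as independent weighted sums.

    Each output node only needs multiply-and-add operations over the inputs,
    and no output depends on another, so all of them could run in parallel.
    """
    outputs = []
    for node_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(max(0.0, total))  # ReLU activation (an assumption)
    return outputs


# Hypothetical toy values: 3 inputs feeding 2 nodes.
inputs = [1.0, 2.0, 3.0]
weights = [[0.5, -1.0, 1.0], [1.0, 1.0, -0.5]]
biases = [0.5, 0.0]
print(dense_layer(inputs, weights, biases))  # [2.0, 1.5]
```

Real models repeat this pattern across millions of nodes, which is why hardware built for many simple simultaneous operations fits so well.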

This is why the initial (and current) winner of the race to secure enough AI chips has been Nvidia, the leader in the GPU market.

Ever Quicker GPUs

With the invention of more efficient algorithms and the quick progress in artificial intelligence they created, the potential applications of AI exploded in the 2020s.

This led to an ever-intensifying race to secure enough hardware, especially Nvidia GPUs in 2023.

In parallel, increasing expectations from AI potential applications require ever smarter AI, which itself requires more computing power. And while securing more GPUs was a solution, better GPUs were needed as well.

The industry delivered, with a 1,000x growth in performance in less than 8 years.

Source: NVIDIA

Can It Last?

There are signs that progress in GPU performance might soon slow down. First, all the “easy” improvements, like making GPUs bigger and with smaller and denser transistors, are getting maxed out. So, further improvement must come from more radical redesigns and innovations.

Secondary problems are also creeping up on the industry. For example, much denser and more powerful GPUs are producing a lot of waste heat, to the point that more of the same would just melt the chips.

This waste heat is also indicative of a lot of wasted power. Securing a sufficient supply of stable, baseload power is becoming an issue for AI companies, with all Big Tech companies rushing to secure supply from nuclear power plants.

Lastly, AI computing is becoming more specialized, with different methodologies emerging at different companies and for different applications, each having different requirements regarding its hardware. So most likely, the era of all-purpose GPUs used by all AI firms is coming to an end, even if slowly.

The Super GPU Era

Until now, most AI data centers have consisted of thousands of GPUs linked together in dedicated servers.

The immediate fix to the growing overheating and power consumption problem is going to be integrated hardware that goes beyond simply piling up individual GPUs.

A major step in that direction is the recent release by Nvidia of GB200 NVL72. This hardware is designed to act as a single massive GPU, making it a lot more powerful than even the previously record-breaking H100 model.

This should also be a lot more energy efficient, a crucial point as the AI industry might fall short on energy before being short on chips at the speed at which AI data centers are built. And more computing & energy efficiency mean less waste heat, which temporarily solves the overheating issue as well.

Source: Nvidia

CoreWeave has become the first cloud provider with generally available Nvidia GB200 NVL72 instances.

(You can read more about CoreWeave and its providing of AI cloud computing to companies developing AI solutions in “CoreWeave: The Cloud AI Hyperscaler”).

Non-GPU AI Hardware

Field Programmable Gate Arrays (FPGAs)

Another type of hardware used for AI development is Field-Programmable Gate Arrays. FPGAs are integrated circuits that can be reprogrammed to perform specific tasks more efficiently. They work by having configurable interconnects between their components.

Source: Microcontrollers Labs

This can make FPGAs more flexible than GPUs and gives them more potential for optimization for specific types of calculations.
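To make the idea of reprogrammable hardware more concrete, here is a minimal sketch of a lookup table, the kind of small configurable logic block FPGAs are commonly built from. It is a software stand-in, not a model of any real device: the class and method names are hypothetical, and a real FPGA combines huge numbers of such blocks through its configurable interconnects.

```python
class LookupTable:
    """A 2-input lookup table: a tiny block of logic that can be reconfigured."""

    def __init__(self, truth_table):
        self.configure(truth_table)

    def configure(self, truth_table):
        # One output bit for each of the 4 possible input combinations.
        if len(truth_table) != 4:
            raise ValueError("a 2-input table needs exactly 4 entries")
        self.truth_table = list(truth_table)

    def evaluate(self, a, b):
        # The two input bits select which stored output bit to return.
        return self.truth_table[(a << 1) | b]


lut = LookupTable([0, 0, 0, 1])   # configured as an AND gate
print(lut.evaluate(1, 1))         # 1
lut.configure([0, 1, 1, 0])       # same block, reconfigured as XOR
print(lut.evaluate(1, 1))         # 0
```

The same block behaves as a different circuit after reconfiguration, without any change to the physical chip.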

FPGAs are also more power-efficient than GPUs. Their low latency (quick reaction time) also makes them very efficient for real-life applications requiring quick reactions.

However, FPGAs are also less able to handle very demanding calculations requiring a lot of power.

Another issue is that while FPGAs are re-programmable, this can be a time and labor-intensive process. So they can be much slower to design, build, and program. This can be a serious issue in a field that is making revolutionary progress every 6 months.

This means that so far, FPGA use cases have been concentrated on certain applications of AI, with general development still relying on less efficient, but all-purpose GPUs:

- Real-time processing: When incoming data needs to be treated quickly, like for digital signal processing, radar systems, autonomous vehicles, and telecommunications.

- Customized hardware acceleration: Configurable FPGAs can be fine-tuned to accelerate specific deep learning tasks and HPC clusters by optimizing for specific data types or algorithms. As a result, modern AI data centers might progressively become a mix of GPUs and FPGAs, with each type of hardware dedicated to the sub-tasks it performs best.

- Edge computing: This means moving compute and storage capabilities closer to the end user, for example, directly into a car or a drone. In these cases, FPGAs’ low power consumption and compact size are advantages.

Application-Specific Integrated Circuits (ASICs)

ASIC systems are built for specific calculation types and are only able to perform these. So it is not so much a computer chip as a pure integrated circuit; FPGAs are sometimes also called programmable ASICs.

The reason to use ASICs instead of FPGAs or GPUs is that ASICs are much quicker than any other logic device. They are also more energy efficient and smaller in size.

This is why, for example, ASIC miners are used in cryptocurrency mining, with the design optimized to perform the specific type of calculation required.

Source: Wevolver

However, ASICs are also a lot more complex to design, so it only makes sense to develop one for a task that will be repeated often enough to cover the design costs. Overall, ASICs make economic sense only when considered for mass production.
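The economics can be sketched as a simple break-even calculation: the one-off design cost has to be recovered through savings on each unit produced. All figures below are hypothetical and chosen only to illustrate the arithmetic; they do not describe any real chip.

```python
import math


def break_even_units(design_cost, asic_unit_cost, alternative_unit_cost):
    """Units needed before a custom ASIC is cheaper than an off-the-shelf chip."""
    saving_per_unit = alternative_unit_cost - asic_unit_cost
    if saving_per_unit <= 0:
        return None  # the ASIC never pays back its design cost
    return math.ceil(design_cost / saving_per_unit)


# Hypothetical figures: $20M design cost, $50 per ASIC, $450 per alternative chip.
units = break_even_units(design_cost=20_000_000,
                         asic_unit_cost=50,
                         alternative_unit_cost=450)
print(units)  # 50000
```

With these made-up numbers, the custom design only pays off beyond 50,000 units, which is why ASICs tend to be reserved for high-volume, frequently repeated tasks.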

Custom designs also require expert knowledge, and widely used programming languages and libraries are potentially not usable.

This need for custom design can, however, be an advantage as well, as it creates additional intellectual property (IP) protection.

The use cases for ASICs are similar to those of FPGAs: edge computing, image recognition, telecommunications, and signal processing. Even more than FPGAs, however, they require a calculation that is repeated often.

Because they are not flexible, they are also unlikely to be sufficient by themselves for any complex AI tasks. They might, however, be integrated into an AI hardware system to give it more efficiency and speed.

Notably, companies like Etched are developing ASICs specifically designed to perform transformer calculations (the “T” in ChatGPT).

Source: Etched

Neuromorphic Chips

Beyond just improving GPUs or building more efficient ASICs, other concepts are looking at completely changing how computing is performed.

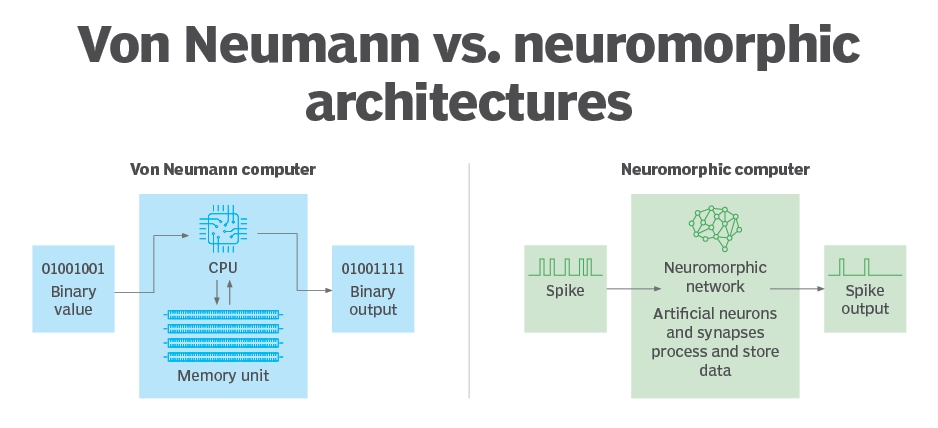

One idea is that if we are designing a neural network, we should have a chip architecture that reflects it.

This is the idea of neuromorphic chips, which work like interconnected neurons at the hardware level instead of simulating it through complex mathematical equations and millions of parallel calculations.

These devices are also sometimes called NPUs (Neural Processing Units), and usually combine many different types of components into one unit.

Neuromorphic chips often use complex electrical signals, closer to analog data. This differs from “normal” computers using a von Neumann design with binary signals (0 & 1).

Source: Tech Target
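As a rough illustration of what "working like a neuron" can mean, the sketch below simulates a leaky integrate-and-fire neuron, a common textbook model of a spiking neuron. This is our choice of example, not a description of how any particular neuromorphic chip works, and the parameter values are arbitrary. A neuromorphic chip would implement this kind of behavior directly in circuitry rather than in software.

```python
def simulate_lif(input_currents, leak=0.9, threshold=1.0):
    """Leaky integrate-and-fire neuron: returns a list of 0/1 spikes."""
    potential = 0.0
    spikes = []
    for current in input_currents:
        potential = potential * leak + current  # leak a little, then integrate
        if potential >= threshold:
            spikes.append(1)   # the neuron fires
            potential = 0.0    # and resets
        else:
            spikes.append(0)
    return spikes


print(simulate_lif([0.5, 0.5, 0.5, 0.0, 0.0, 1.2]))  # [0, 0, 1, 0, 0, 1]
```

The neuron stays silent until enough input has accumulated, then emits a single spike, so information is carried by the timing of events rather than by a constant stream of binary arithmetic.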

Many methods are currently being explored to create neuromorphic chips.

Photonics Chips

Another option to bypass many of the current limitations of silicon hardware is to switch to photonic computers. Instead of electrons carrying the information, photons from lasers do.

This method carries the advantage of being a lot less vulnerable to overheating and of light being the fastest moving thing in the Universe.

For now, the durability of photonics components, notably memory, has been an issue, but this is changing quickly.


Using Actual Neurons

Another way to push AI toward the performance of an actual brain may simply be to use brain cells in the first place.

After all, if a neural network made of actual neurons can be highly adaptive and excel at pattern recognition in nature, why would it not work in a more artificial context?

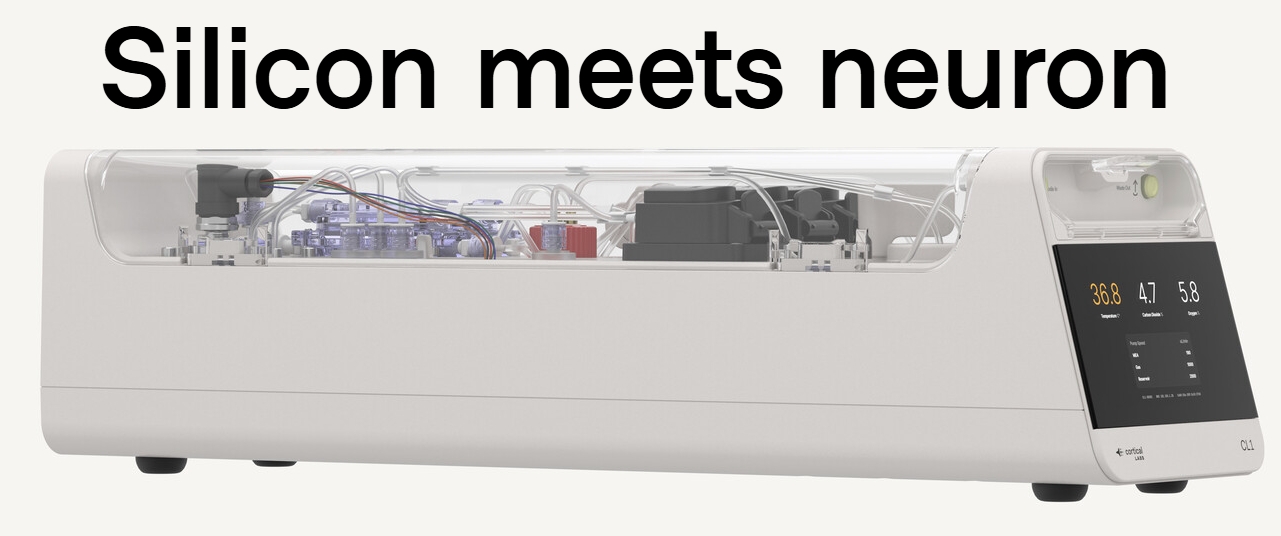

In March 2025, this concept moved further with the release by Cortical Labs of CL1, the first Synthetic Biological Intelligence (SBI).

Source: Cortical Labs

Real neurons are cultivated inside a nutrient-rich solution, supplying them with everything they need to be healthy. They grow across a silicon chip, which sends and receives electrical impulses into the neural structure.

This genuinely blurs the line between artificial and natural intelligence. Is a computer made of actual neurons still artificial?

In Cortical Labs’ own words:

“We integrate the neurons into the biOS with a mixture of hard silicon and soft tissue. You get to connect directly to these neurons. Deploy code directly to the real neurons, and solve today’s most difficult challenges.

The neuron is self-programming, infinitely flexible, and the result of four billion years of evolution. What digital AI models spend tremendous resources trying to emulate, we begin with.”

This could be a true breakthrough if some theories considering the human brain as more of a quantum computer than an electrical system prove true.

While still very much an emerging technology, it could receive a boost from the progress made in related fields, like 3D printing of human organs, including functional brain tissue, as well as the production of organoids (mini-brains) by companies like FinalSpark and BiologIC.

Brain-Computer Interface

Another way to boost AI hardware could be to use it in direct interface with another type of biological supercomputer: the brain already in our skull and doing our thinking.

At first, Brain-Computer Interfaces (BCI) are likely to be mostly used to help people suffering from neurological conditions.

New methods to make the implants more durable and less invasive should help as well.

In the long run, this, too, could blur the line between machine and human intelligence. If you think something with the assistance of an AI interacting directly with you through a BCI, who exactly did the thinking?

However, due to ethical and safety concerns, this is likely one of the most distant technologies, despite the anticipation of science fiction works like Altered Carbon, Neuromancer, or Cyberpunk 2077.

(We covered the most prominent companies in the field in “5 Best Brain-Computer Interfaces (BCI) Companies”).

How Much Compute Is Truly Needed?

DeepSeek

For many years, every AI company has been racing to add as much computing power as fast as possible, assuming that more is always better.

This has been challenged by the abrupt arrival of Chinese AI company DeepSeek. With a model 10-100x more efficient than its competitors, and costing only 3%-5% as much for equivalent or superior performance, DeepSeek has challenged the requirement for more computing power to build better AIs.

A big reason why DeepSeek focused so much on model efficiency was the limited access of Chinese AI firms to AI chips. This became clear when, soon after the launch, it was revealed that AI models from other Chinese firms performed just as impressively: Alibaba’s Qwen, Moonshot AI’s Kimi, ByteDance’s Doubao, and Baidu’s Ernie Bot.

As the saying goes, “Necessity is the mother of invention”.

It is likely that in the upcoming years, a dual focus on better performance and more efficiency will dominate the AI industry.

However, this is not to say that more computing will not deliver better results. Simply, this is not the only path to success in the AI industry, and very well-funded companies like OpenAI might have been a little lax with their spending.

For now, most AIs have been work-in-progress and experimental designs with only limited applications. The more they are integrated into the workflow of millions of companies worldwide, the more AI computing will be required.

And the more computing is needed, the greater the demand for more efficient and less energy-intensive hardware.

So, as with traditional computing, we can assume that the market will still be demanding ever-improved chips, especially as AI might soon become one of the world’s largest energy consumers.

EdgeAI & AI PCs

Another effect of the emergence of DeepSeek and other Chinese AI models with lower compute requirements is that AI models, especially “distilled” ones, can now run on high-end individual machines, instead of gigawatt-scale giant AI data centers.
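A back-of-the-envelope way to see why smaller models fit on individual machines is to estimate the memory needed just to hold their weights. The sketch below assumes 16 bits per parameter by default and uses hypothetical model sizes; it ignores everything else a running model needs, so treat it as a lower bound rather than a sizing guide.

```python
def model_memory_gb(num_parameters, bits_per_parameter=16):
    """Approximate memory needed just to hold a model's weights, in gigabytes."""
    total_bytes = num_parameters * bits_per_parameter / 8
    return total_bytes / 1e9


# Hypothetical sizes, not figures for any specific model.
large_model = model_memory_gb(600e9)   # a very large model
small_model = model_memory_gb(7e9)     # a much smaller, distilled-style model
print(f"Large: {large_model:.0f} GB, small: {small_model:.0f} GB")
```

Under these assumptions, the large model needs about 1,200 GB for its weights alone, while the small one needs about 14 GB, which is within reach of a high-end personal machine.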

This vindicates the proponents of EdgeAI, who argue that a lot of AI computing needs to be done “on-site”, instead of only in the cloud.

As DeepSeek is an open-source model, this also opens the way for many individual experiments with AI computers: powerful enough to run AI models, but cheap and small enough to be bought by individual people. Overall, this leads not so much to less AI compute demand, but maybe a more decentralized process for developing and using AI applications.

So AI PCs are likely to be the next big trend in PC building, with the specialized press already discussing the question in detail, including which brand is best. This is especially true as AI is making its way into most tech companies’ offerings, including those of Microsoft (MSFT), with Copilot becoming almost omnipresent in Windows and the company’s other software.

Conclusion

Starting from gaming GPUs, the hardware for AI is evolving quickly. This started with GPUs designed for AI and is continuing with “super GPUs” designed from scratch to fit into AI data centers with lower energy consumption and heat production.

The next step is likely further integration of generalist GPUs with ASIC and FPGA chips to perform specific AI tasks with lower power and space requirements.

Further down the road, the bets are on which technology will be the winner for AI computing: neuromorphic chips, photonics, spintronics, or even actual biological neurons trained to interact with silicon substrates, and maybe AI interacting directly with our own brains through direct interfaces.

For all these technologies, it is certain that the explosive growth in AI’s capacity and the associated explosive growth in applications will keep the demand for more and better AI hardware growing.

So even if more efficient AI models arrive on the market, like DeepSeek, the sudden rush of demand for AI PCs could be a good indicator that AI hardware will likely stay in somewhat short supply compared to demand in the coming years.

A Leader in AI Hardware

Nvidia

NVIDIA Corporation (NVDA)

NVIDIA has evolved from a niche semiconductor company specializing in graphics cards to a tech giant at the forefront of the AI revolution and the massive amount of hardware it needs.

This was achieved through the development of CUDA, a general-purpose programming interface for NVIDIA’s GPUs, opening the door for uses other than gaming.

“Researchers realized that by buying this gaming card called GeForce, you add it to your computer, you essentially have a personal supercomputer. Molecular dynamics, seismic processing, CT reconstruction, image processing—a whole bunch of different things.”

This wider adoption of GPUs, and more specifically NVIDIA hardware, created a positive feedback loop based on network effects: the more uses, the more end users and programmers familiar with it, the more sales, the more R&D budget, the more acceleration in computing speed, the more uses, etc.

Source: Nvidia

Today, the installed base includes hundreds of millions of CUDA GPUs.

Another remarkable thing about the evolution of AI computing power is that it has grown much faster than the pace set by Moore’s Law for CPUs. This is because not only is the GPU hardware getting better, but the required processing power has also decreased thanks to radical improvements in how neural networks are trained.

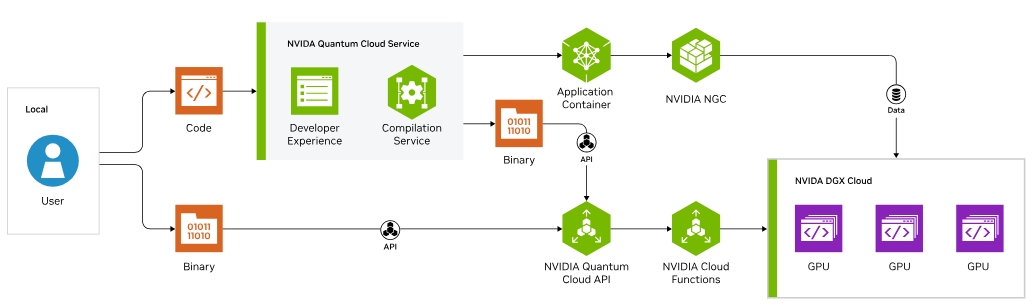

While a leader in GPU and AI, NVIDIA is also very active in developing quantum computing into a new growth engine.

Similar to how it deployed CUDA for neural network applications, Nvidia has released CUDA-Q for quantum computing, along with a cloud service through which NVIDIA quantum computing capacity can be rented.

Source: NVIDIA

This also includes technology like NVIDIA’s cuQuantum for researchers to emulate quantum computers, cuPQC for post-quantum cryptography, and DGX Quantum for the integration of both classical and quantum computing.

Overall, NVIDIA is at the forefront of building a quantum computing ecosystem, capitalizing on its position as a leader in AI and AI hardware.

Source: Nvidia

With a strong position in AI, crypto mining, and maybe soon quantum computing, Nvidia is very well positioned to be one of the dominant computing hardware companies in the upcoming decade.

You can read more about Nvidia’s history, business model, and innovations in “NVIDIA (NVDA) Spotlight: From Graphics Giant to AI Titan”.
